Google, Oliver Wyman and Corridor Platforms

Banks have been using generative AI in their call centers, software development, investment research and back offices. Some are even making it available to their entire employee base.

But for the most part, U.S. banks have been wary of offering a gen AI-driven chatbot to their customers. The risks are grave: Large language model bots can be inappropriate or toxic, they can provide misinformation and they can hallucinate.

In an initiative they’re launching Monday, cloud provider Google, consultancy Oliver Wyman and AI testing platform provider Corridor Platforms are offering banks a responsible AI sandbox to test the use of any gen AI, with advice available from Oliver Wyman advisors. The sandbox will be free for three months. (Separately, Corridor’s platform can run in any cloud environment.)

“The idea is, in a three-month period, they really get a good sense of exactly what needs to be done for their use case to go live and what governance is needed, and eventually, get comfort that it’s responsible and a good thing for their customer, and hopefully will also meet regulatory muster,” said Manish Gupta, founder and CEO of Corridor Platforms and former technology chief at American Express.

The sandbox addresses some bank regulations, Gupta said. For instance, it provides bias tests, accuracy tests and stability tests.

At the end of the three-month period, companies will be able to move the newly trained gen AI models to their own environments. “We will make it very portable,” Gupta said.

Sandbox idea gaining traction

The largest, most sophisticated banks can build their own sandboxes, and some are doing so.

“Tier 1 banks have been using sandboxes with good results, for example, HSBC and JPMorganChase,” Alenka Grealish, a lead analyst at Celent, told American Banker.

The benefit for banks of using a third-party-provided sandbox to test AI tools, rather than doing their own testing in-house, is that “it gets them probably three years ahead on the journey, because the Corridor team has built out the capabilities that are needed,” said Dov Haselkorn, a consultant who until a month ago was chief operational risk officer at Capital One. “Therefore, you can leverage the R&D and the thought and the development that they’ve been putting into it for years now overnight. And speed is really of the essence, especially in this space, where everybody is fighting to roll out new capabilities immediately. Sure you could build it, but if it’s going to take you three years to build it, you’re going to be behind the curve.”

Capital One isn’t using the responsible AI sandbox yet, but the bank has had discussions with the companies behind it, Haselkorn said.

In addition to improving speed, use of a sandbox could also mitigate risk, such as the danger of a chatbot saying something wrong to a customer, Haselkorn said.

“Data risk and third-party data sharing risk are extremely relevant to this type of use case,” Haselkorn told American Banker. “Data provenance is a major issue in this space. So if you’re building your own models, especially if you’re using web-scraped data or data from more recently established datasets that are commonly used to develop open source models, there can be compliance questions with the use of copyrighted data. If you source data from, say, Bloomberg, you’re allowed to use it for some things, you’re not allowed to use it for other things. You can’t turn around and commercialize that data, for example.”

In his previous role at Capital One, Haselkorn put a lot of effort into making sure the company was complying with its own agreements, as well as protecting customer data. “If we’re using our own customer data to build our own models and then we’re deploying models on platforms like Corridor’s, we have to make sure that these controls are in place so that none of our customer data accidentally leaks to third parties.”

How the new sandbox will work

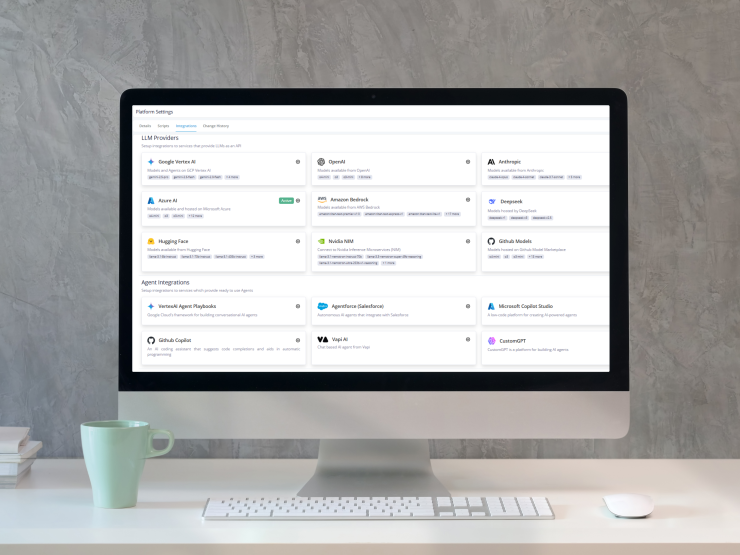

To start with, the responsible AI sandbox will contain a version of Google’s Gemini generative AI model, trained in customer service, that bankers can test within Google Cloud. Bankers can also swap in other gen AI models, connect to internal or external data sources via API and see how those models would handle their customers’ questions.

“In this industrial revolution for knowledge workers, the industry needs to learn how to control” the growing adoption of generative AI in their businesses, said Michael Zeltkevic, managing partner and global head of capabilities at Oliver Wyman. This requires solving hard engineering problems, such as how to deal with toxicity, he said. The sandbox gives bankers who aren’t well-versed in generative AI the chance to engage with it in a safe space, Zeltkevic and Gupta said.

Oliver Wyman will offer banks help with using the sandbox and getting gen AI models into production in their own data centers and cloud instances.

A similar sandbox in the UK

The AI sandbox idea has been popular in other countries. For instance, in the U.K., the banks’ regulator, the Financial Conduct Authority, said in September it would launch a “Supercharged Sandbox” to help firms experiment safely with AI to support innovation, using NayaOne’s digital sandbox infrastructure, which runs on Nvidia hardware. “This collaboration will help those that want to test AI ideas but who lack the capabilities to do so,” said Jessica Rusu, the FCA’s chief data, information and intelligence officer.

“The U.K.’s Financial Conduct Authority has recognized the value of leveling the playing field by offering an AI sandbox,” Grealish said.

The FCA sandbox has received about 300 applications, according to Karan Jain, CEO of NayaOne.

One reason companies use NayaOne’s sandbox is to train an AI model while keeping data from being shared with third parties, Jain said. For instance, one insurer uses the platform to train an AI model on its claims images.

“There’s no way an insurance company sends claim data to an AI company, and there’s no way an AI company gives its model to an insurance company,” Jain said. “They brought the data and the AI model into NayaOne, we locked it down, and we provided the GPUs needed to do that work. We also have all the Nvidia software available through our platform.”

U.K. banks use the FCA digital sandbox for such purposes as fraud detection and cybersecurity, Jain said.

The four big enterprise generative AI use cases NayaOne sees in its sandboxes outside the FCA initiative are assisting customer service representatives in contact centers, assisting developers in writing and updating code, back-office efficiency (for instance, using it to extract data from PDFs), and compliance checks.

“You have a secure environment where data doesn’t leak,” Jain said. “And you can bring one person or multiple teams together to learn, adapt, build, [which is] where the industry is stuck right now, in my opinion.”

One bank spent 12 months onboarding the AI coding assistant Lovable and making it available to a small group of people, Jain said. By the time it was ready for use, newer coding assistants like Cursor had come out.

“That’s a very real challenge that banks are facing: The speed of adoption of technology is 10 times slower than the speed of technology that’s coming into the market and that employees want,” Jain said.